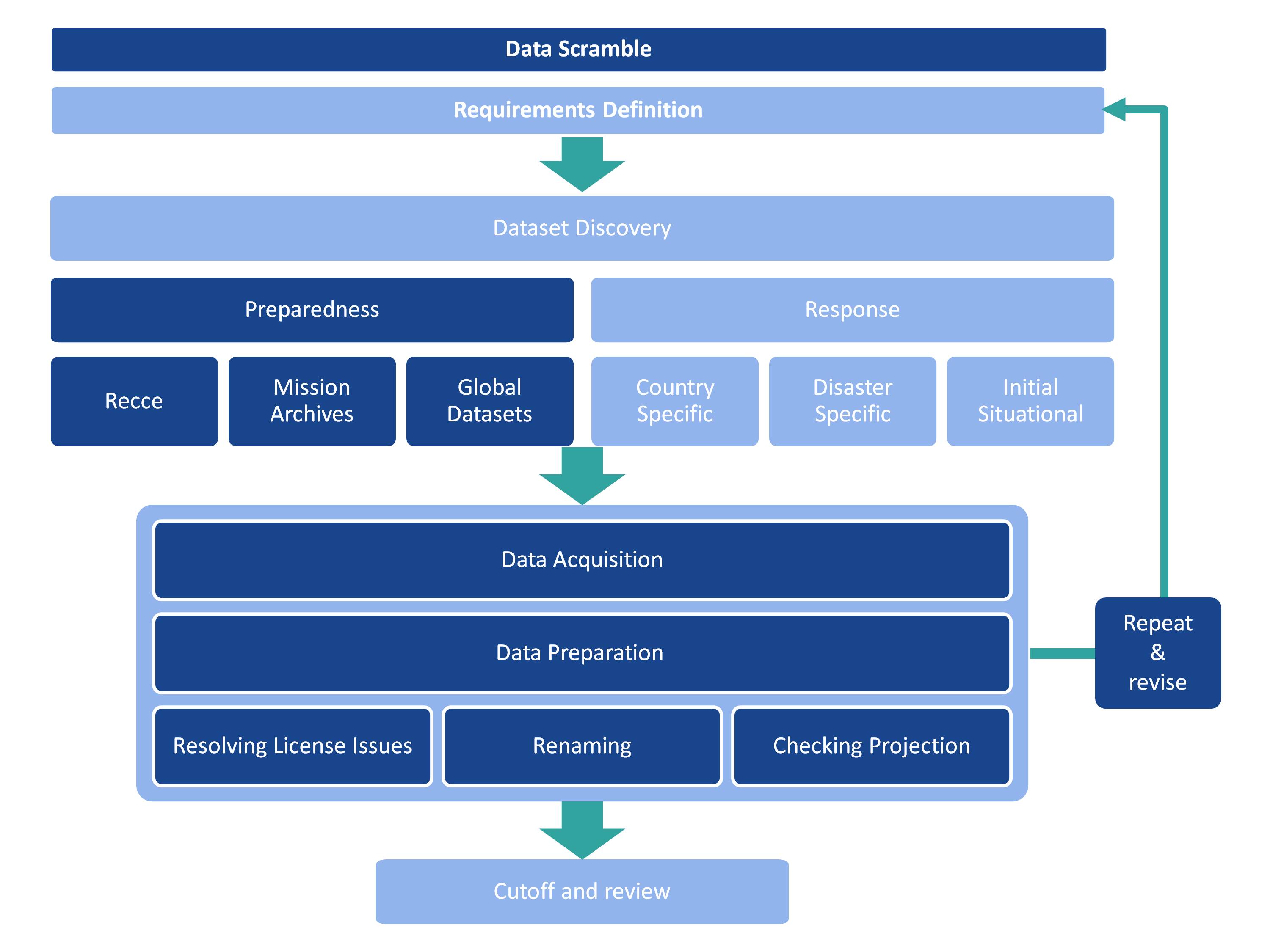

This is the process by which data and information are gathered whilst an individual or organisation is mobilising after the trigger event – sometimes called the crash move phase. The purpose of the scramble is to obtain data and information that may be needed during the initial phase of a response. As internet connections can often be poor or non-existent early on, deploying with some data to begin with is vital. Data scrambles should be intelligence-led, aiming to anticipate what might be needed in the future and not just for the initial mapping.

The data gathered is typically, but not exclusively, basemap and baseline data. Some early situational data may also be acquired. A data scramble should include the following steps.

Requirements definition

Based on what is known in the early stages, the aim is to establish the data necessary to mount an effective humanitarian response. This should include:

- Disaster overview - establish the facts of the disaster from Office for the Coordination of Humanitarian Affairs (OCHA) reports, ACAPS and other publishing services, including media outlets.

- Area of interest - where is the disaster and what is its geographical reach? Which part of the country is it in, or does it extend over multiple countries? What additional data is required to put the affected country in context when mapping? Some data (primarily basemap data) from neighbouring countries may be required.

Dataset discovery

Establishing what data is available. Draw on any preparedness work, make contact with people and organisations, and check your post-mission archives.

- Data preparedness - check your data preparedness activities and see if there are data and contacts available.

- Post mission archives - check for data from previous responses. There may be good datasets, particularly basemap and baseline, available.

- Humanitarian Data Exchange - this may have Common Operational Datasets (CODs) for the area of interest. CODs are data that all responders should try to use.

- Organisational and individual contacts - these may be primarily based on contacts established during any preparedness work or a simple internet search.

- Situational data - can be gained from situational reports from lead organisations such as OCHA, GDACS, IFRC or ReliefWeb, as well as both national and international news reports.

Data acquisition

The actual process of downloading or obtaining the data. Beyond saving it to an appropriate location, it is useful to keep an original copy of the files. See Preparation for further details.
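Keeping an untouched original can be scripted. The following is a minimal sketch, not a prescribed workflow: the function names and the `original` folder name are assumptions for illustration, and the checksum comparison is an extra safeguard that the guidance above does not require.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def archive_original(downloaded: Path, original_dir: Path) -> Path:
    """Copy a downloaded file, unmodified, into a folder of originals.

    `original_dir` is a hypothetical location; use whatever your folder
    structure defines for original data.
    """
    original_dir.mkdir(parents=True, exist_ok=True)
    target = original_dir / downloaded.name
    if target.exists():
        # Never overwrite an archived original; it is the rollback copy.
        raise FileExistsError(f"{target} is already archived")
    shutil.copy2(downloaded, target)  # copy2 also preserves timestamps
    if sha256_of(downloaded) != sha256_of(target):
        raise IOError(f"Copy of {downloaded.name} does not match the source")
    return target
```

Refusing to overwrite an existing file is a deliberate choice here: if a dataset is downloaded again later, it should be archived under a new name so that the earlier original remains available.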

Check all aspects of the acronym CUSTARD, which stands for: coordinate system; units; source; triangulate; absent values; restrictions; date. See receiving data in the field for more details.

Resolve usage issues - understand what limitations, if any, apply to using the data. There might be license agreements with providers that need to be signed or agreed. Data may be limited to particular uses, and this should be recorded.
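One way to make sure no CUSTARD item is overlooked is to keep a small record per dataset. The sketch below is illustrative only: the class name, field names and example values are assumptions, and a spreadsheet column per item would serve the same purpose.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class CustardCheck:
    """One CUSTARD record per dataset; None means 'not yet checked'."""
    coordinate_system: Optional[str] = None
    units: Optional[str] = None
    source: Optional[str] = None
    triangulate: Optional[str] = None    # what the data was cross-checked against
    absent_values: Optional[str] = None  # how missing values are represented
    restrictions: Optional[str] = None   # license terms or usage limits
    date: Optional[str] = None

    def outstanding(self) -> List[str]:
        """Return the names of the CUSTARD items that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Hypothetical example: a boundary dataset with three items recorded so far.
check = CustardCheck(
    coordinate_system="WGS 84",
    source="national mapping agency",
    date="2019",
)
print(check.outstanding())
```

Recording usage restrictions in the same record as the other six items keeps the license information next to the dataset it applies to.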

Data preparation

The process of making the data ready for use. See the sections on Data naming conventions and Folder structures, which describe the reasons for, and the process of, data management.

- If you haven’t done so, save a copy of the data in its original format. This means that if you later have an issue or make a mistake, you can roll back to an original version.

- Make sure the data opens in the correct software.

- For GIS data:

    - Make sure it opens in ArcGIS or QGIS.

    - Check the projection and coordinate system. If required, re-project. Record the projection and coordinate system in a metadata file and your data scramble spreadsheet.

    - Clip to the area of interest.

- For spreadsheet data:

    - Make sure it opens in Excel.

    - Clean the data so it can be used in joins in GIS (check column headers, remove merged cells, etc). A clean spreadsheet also makes it easier to manipulate and analyse the data through pivot tables and filters.

    - If there are coordinates in the data, create a shapefile from it for use in a GIS platform.

- Save a copy of the processed data to a working folder using the appropriate data naming convention.

- Fill out any additional notes and metadata in your data scramble spreadsheet.
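Cleaning column headers is one of the preparation steps that is easy to automate. The following is a rough sketch under stated assumptions: the function names are invented for illustration, and the rule used (lower case, with underscores replacing anything other than ASCII letters and digits) is just one common convention, not one set out in this guide.

```python
import re
from typing import Iterable, List


def clean_header(raw: str) -> str:
    """Lower-case a header and replace runs of other characters with '_'.

    Note: this simple rule drops non-ASCII letters, so headers in other
    scripts would need a different approach.
    """
    text = re.sub(r"[^a-z0-9]+", "_", raw.strip().lower())
    return text.strip("_")


def clean_headers(headers: Iterable[str]) -> List[str]:
    """Clean every header and make the results unique, keeping their order."""
    used = set()
    cleaned = []
    for raw in headers:
        base = clean_header(raw) or "column"  # blank headers get a placeholder
        candidate, suffix = base, 1
        while candidate in used:
            suffix += 1
            candidate = f"{base}_{suffix}"
        used.add(candidate)
        cleaned.append(candidate)
    return cleaned


# Hypothetical headers as they might arrive in a spreadsheet.
print(clean_headers([" Admin 1 Name ", "P-Code", "P Code", ""]))
# prints ['admin_1_name', 'p_code', 'p_code_2', 'column']
```

Unique, predictable field names matter because a join fails or silently mismatches when two columns collapse to the same name.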

Review

The data should be periodically reviewed to establish any gaps, and to adjust the requirements definition as needed, for example if the area of interest becomes bigger, or there is a significant aftershock. This could be every few hours or every couple of days depending on the speed of the scramble and response.
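A review can be as simple as comparing the themes in the requirements definition with the themes acquired so far. The sketch below assumes each dataset has been tagged with a theme name; the function name and the theme names are hypothetical.

```python
from typing import Dict, Iterable, List


def review_gaps(required: Iterable[str], acquired: Iterable[str]) -> Dict[str, List[str]]:
    """Compare required themes with acquired themes.

    Returns the themes still missing and any acquired themes that were
    not in the requirements definition.
    """
    required_set, acquired_set = set(required), set(acquired)
    return {
        "missing": sorted(required_set - acquired_set),
        "unplanned": sorted(acquired_set - required_set),
    }


# Hypothetical themes for illustration.
required = ["admin boundaries", "roads", "settlements", "population"]
acquired = ["admin boundaries", "roads", "health facilities"]
print(review_gaps(required, acquired))
# prints {'missing': ['population', 'settlements'], 'unplanned': ['health facilities']}
```

When the area of interest grows, adding the new requirements to the `required` list and re-running the comparison shows what the next round of the scramble needs to find.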

Final cutoff

A final cutoff may be needed if you are moving into the affected area and need to make sure that you or your organisation have all the data stored locally. The scramble may continue after the final cutoff, but at this stage it should focus on a few very specific datasets rather than on large volumes of data that may be hard to obtain in the field. It is useful to produce a summary of the scramble, including:

- what data has been acquired – include what information was found, but also summarise the gaps

- recommendations – is there one preferred dataset over another?

- license information – what are the license terms for the data?

- known issues – e.g. some data isn’t as accurate as others; geographical administration boundaries don’t all align to each other.